My Top 5 Wishes for WWDC 2023

WWDC is only weeks away, so it's time for me to update my wishlist. One wish came true last year; how many will it be in 2023?

Last year, I wrote an article with the exact same purpose for WWDC 2022, listing my top 3 wishes. Thankfully, one of them (wish #3) was actually fulfilled. The Xcode 14 Release Notes describe the related new feature like this:

Simplify an app icon with a single 1024x1024 image that is automatically resized for its target. Choose the Single Size option in the app icon’s Attributes inspector in the asset catalog. You can still override individual sizes with the All Sizes option. (18475136) (FB5503050)

My other two wishes still hold true and remain at the top of my wishlist:

#1: A new Swift-only database framework to replace CoreData in the future. I outlined how I imagine such a framework could work here; read that for details.

#2: App modularization support within Xcode. Currently, I have to maintain a long Package.swift file manually to modularize my app; read this for more.
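For context: with this approach, each feature becomes a target in a local Swift package, and every target plus its dependencies has to be listed by hand in the manifest. The following is a minimal sketch of such a Package.swift, assuming an iOS 16 app; the package, product, and target names are hypothetical placeholders, not my actual setup. In a real app, this list grows with every new module, which is exactly the maintenance work I'd love Xcode to take over.

```swift
// swift-tools-version: 5.7
import PackageDescription

// Hypothetical modularized app: every feature is its own target,
// and each dependency edge must be declared manually.
let package = Package(
    name: "AppFeatures",
    platforms: [.iOS(.v16)],
    products: [
        .library(name: "TimerFeature", targets: ["TimerFeature"]),
        .library(name: "SettingsFeature", targets: ["SettingsFeature"]),
    ],
    targets: [
        // Shared model layer used by all features.
        .target(name: "Model"),
        .target(name: "TimerFeature", dependencies: ["Model"]),
        .target(name: "SettingsFeature", dependencies: ["Model"]),
        .testTarget(name: "TimerFeatureTests", dependencies: ["TimerFeature"]),
    ]
)
```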

With these out of the way, here are 3 new wishes I have for this year's WWDC.

#3: Circular charts in the Swift Charts library

At the end of last year's article, I stated:

In the past, I was always surprised by at least one or two frameworks entirely, like SwiftUI in 2019, WidgetKit in 2020, and DocC in 2021. What will it be this year? I can’t wait to find out!

Well, it was definitely Swift Charts! It's a shiny new library that not every app may need right away, but I'm sure it will inspire many developers to visualize some of their app's data and give users a better overview of the data they've contributed. Because it's SwiftUI-only, not every team has had the opportunity to use it yet.

The first version of the Charts library shipped with a good number of chart types, all of which need only one or two straight axes. For example, you can already draw (and customize!) line charts, bar charts, and even heat maps. Jordi Bruin has put together a GitHub repo with screenshots & source code that gives a good overview of what's already possible today.

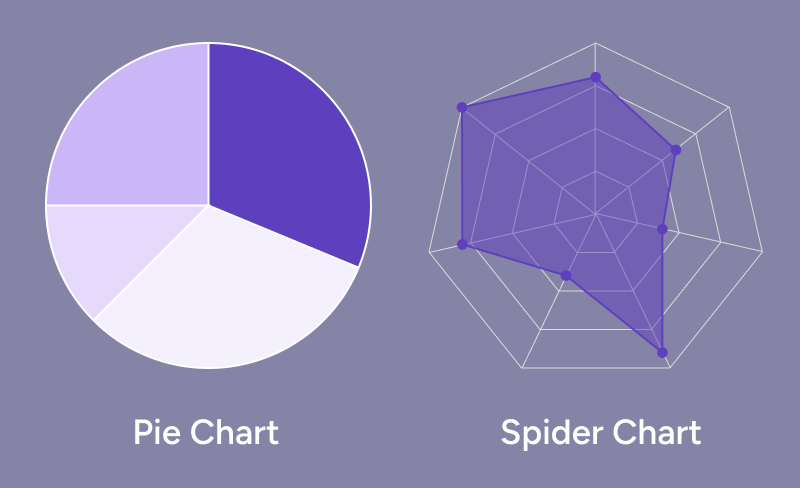

When I tried using Swift Charts myself in Open Focus Timer, the open-source app I develop during my live streams on Twitch, I wanted to show users a pie chart of how their work time is split across different projects. However, pie charts are currently not supported by the Charts library, and neither are spider charts, doughnut charts, or sunburst charts. I think these are so common that they should all be added to a second version of the Charts library this year.
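Until Swift Charts supports them, a pie chart has to be drawn by hand, for example with SwiftUI shapes. The data preparation step is simple, though: each project's work time becomes a slice whose angle is proportional to its share of the total. Here's a minimal sketch of that step only; `PieSlice` and `pieSlices(for:)` are hypothetical names, not part of Swift Charts or Open Focus Timer.

```swift
import Foundation

/// One slice of a hypothetical pie chart: a project and the angles it spans.
struct PieSlice {
    let project: String
    let startAngle: Double  // in degrees
    let endAngle: Double    // in degrees
}

/// Converts work durations per project into consecutive slices covering 360 degrees.
func pieSlices(for durations: [(project: String, seconds: TimeInterval)]) -> [PieSlice] {
    let total = durations.reduce(0.0) { $0 + $1.seconds }
    // Without any tracked time there is nothing meaningful to draw.
    guard total > 0 else { return [] }

    var slices: [PieSlice] = []
    var currentAngle = 0.0
    for entry in durations {
        // Each slice's sweep is proportional to its share of the total time.
        let sweep = entry.seconds / total * 360
        slices.append(PieSlice(project: entry.project, startAngle: currentAngle, endAngle: currentAngle + sweep))
        currentAngle += sweep
    }
    return slices
}
```

For example, one hour on one project and 30 minutes on each of two others yields slices spanning 0 to 180, 180 to 270, and 270 to 360 degrees. These values could then be converted with SwiftUI's `Angle.degrees(_:)` and passed to `Path.addArc(center:radius:startAngle:endAngle:clockwise:)` to draw the actual wedges.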

And next year, maybe tree-like charts (or diagrams) could be added as well. They could help create professional tools, e.g. for drawing a UML diagram of an app's model layer, for visualizing data structures like B-trees, or for building interactive state machines that help developers understand features on a more abstract level. I have plenty of ideas that could make great use of such charts or diagrams!

#4: Streamer Mode in Xcode to redact code

Sharing content is a great thing. It not only helps those who consume your content learn something new or get inspired; their feedback can also help you, the content creator, discover aspects you haven't thought about yet, such as subtle bugs, UX issues, or a lack of accessibility support. But if the content to be shared is related to a coding project, security & secrecy concerns come into play.

For example, when sharing an open-source framework that integrates with 3rd-party services, we have to ensure that no testing credentials are ever committed to the repository, or else they might leak and get abused. In fact, this is exactly the situation I ran into with SwiftPM for my open-source tool BartyCrouch; I explained how I solved it in this article.

And secrets aren't the only thing we want to hide from others: No company wants the core value of its products, which took a lot of effort to figure out, to leak to a potential competitor. Copycats are a serious problem that can destroy businesses. The solution for Git repositories is simple enough: Valuable code that shouldn't leak needs to be kept closed-source and never shared with outsiders.

But hiding secrets from a Git repository or keeping parts of the codebase private is only part of the story. What about video calls with friends or strangers who want to help us? What about indie developers (like me) who want to live-stream their work as much as possible? There are so many situations where I would love to simply share my screen and show a particular problem or solution right within the context of my real-world application, but I don't. The risk of accidentally leaking sensitive code is just too high for me. This leads to many missed opportunities:

- As an indie developer, I would love to live-stream the development of updates to my closed-source apps too, but there are parts I don't want to leak. So I only live-stream my open-source work. That's a big bummer.

- As a framework user, I would love to report bugs or feature requests by quickly recording my screen and sharing a video of the in-context usage. Currently, I often skip reporting anything because I find it too cumbersome to prepare a demo. Or I copy parts of the code out of their context and then have to answer several questions explaining why I'm doing things exactly the way I do.

- As a participant in a weekly developer exchange call, I prefer to talk about code on an abstract level when I run into problems rather than sharing my screen and showing it in detail. And even if I do share my screen, I would never allow the other person to control it (e.g. to quickly show me something). I know part of the problem is my own trust issues, but I'm not alone.

So, what I wish for is a feature within Xcode to mark specific parts of my codebase as "confidential" so that, whenever I share my screen, all confidential parts are hidden from the shared content. Apps like Discord commonly call such a feature "Streamer mode". It could be implemented in a variety of ways; here's how I imagine it working:

- The confidential content could stay visible for the streamer but be highlighted by Xcode so the streamer is aware that it won't appear in any shared content.

- Content could be marked as "confidential" on different levels, such as the file level (all contents of a file are redacted), the type level, the function level, or the variable level. On the variable level, the entire lines would be redacted.

- The names of redacted content (file name / type name / function declaration / variable name) could still be shown, with only their bodies/values redacted.

- The names of all content marked as "confidential" could be locked from editing to ensure that renaming it doesn't temporarily make its contents leak.

- The confidential markings could be stored in a file that can be committed to Git so other developers on a team could benefit from them in their own external calls, too.

- Streamer mode could be enabled automatically whenever an app is using the screen-capture APIs, so developers wouldn't have to remember to turn it on manually. This should make it work for both video calls (in FaceTime/Zoom etc.) and screen recordings (in OBS/QuickTime etc.).
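To make the idea a bit more concrete, here's a minimal sketch of how such markings might be modeled and applied. Nothing like this exists in Xcode today: the `ConfidentialScope` and `ConfidentialMarking` types and the `redact(_:path:markings:)` helper are hypothetical, and the sketch only covers keeping declarations visible while replacing marked body lines with a placeholder.

```swift
import Foundation

/// Hypothetical levels at which code could be marked as confidential.
enum ConfidentialScope: String, Codable {
    case file, type, function, variable
}

/// Hypothetical marking; Codable so it could live in a file committed to Git.
struct ConfidentialMarking: Codable, Equatable {
    let filePath: String
    let scope: ConfidentialScope  // informational only in this sketch
    /// Name of the redacted type, function, or variable; nil for file-level markings.
    let symbolName: String?
    /// 1-based, inclusive range of body lines to hide; the declaration line stays visible.
    let firstLine: Int
    let lastLine: Int
}

/// Replaces every marked line of `source` with a placeholder, keeping its indentation.
func redact(_ source: String, path: String, markings: [ConfidentialMarking]) -> String {
    var lines = source.components(separatedBy: "\n")
    for marking in markings where marking.filePath == path {
        // Clamp the marked range to the lines that actually exist in this file.
        let lower = max(marking.firstLine, 1)
        let upper = min(marking.lastLine, lines.count)
        guard lower <= upper else { continue }
        for index in (lower - 1)...(upper - 1) {
            let indentation = lines[index].prefix(while: { $0 == " " || $0 == "\t" })
            lines[index] = String(indentation) + "// redacted"
        }
    }
    return lines.joined(separator: "\n")
}
```

Because the markings are `Codable`, they could be encoded with `JSONEncoder` into a file that's committed alongside the code, matching the Git-sharing idea from the list.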

I'm not very optimistic about such a feature making its way into Xcode, as I don't feel many developers commonly bring up this problem. It feels like a niche issue. But in my opinion, it's one of those features you don't know you were missing until you get used to it, and then you never want to go without it again.

#5: AR/VR OS development with an iPhone

Tim Cook has been publicly talking about the potential of AR since as early as 2016. Assuming he wouldn't talk about something Apple hadn't already researched for at least a whole year, we can safely say that Apple has been working on AR products for at least 8 years. The first iPhone took two and a half years to develop. The first Apple Watch took three years of development. So while one might ask why we still haven't gotten Apple's first AR device, I'm simply going to assume we will get one this year. I will also assume that this new device will ship with its own operating system; let's call it "AR/VR OS".

I can already foresee a problem for developers when such a device gets announced: Not every developer will be able to purchase one right away. Some won't due to financial restrictions, others because first-gen products tend not to be available in all countries at once. In the past, this was not a big issue. Xcode ships with simulators for the iPhone, the iPad, the Apple Watch, and even the Apple TV. While there are some restrictions (like no camera support), most apps can be fully developed and, for the most part, even tested on these without any issues. That's because all these devices have one thing in common with our development device, the Mac: They all render a virtual interface on a flat screen.

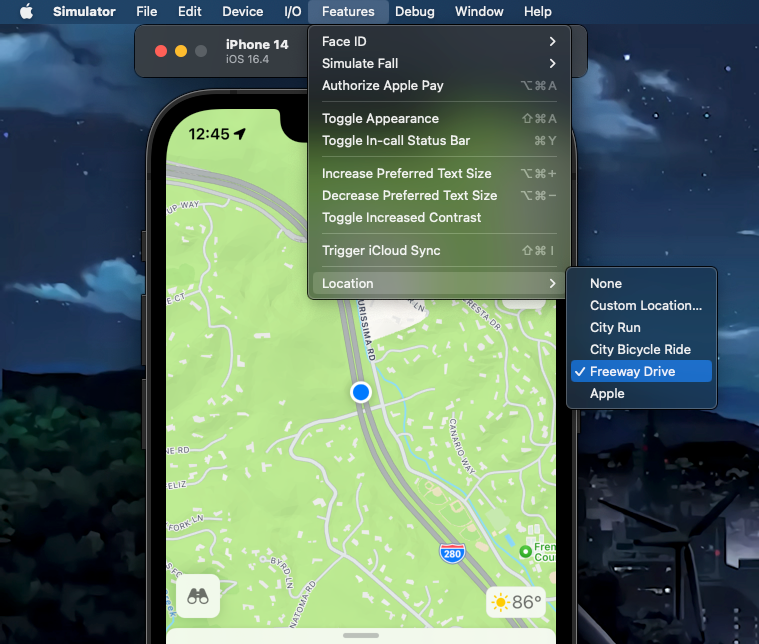

But an AR device is different: By its very definition, it "augments reality", which means that to develop and properly test any useful app, we need access to a camera feed so we have something to "augment". While Apple could provide some sample virtual environments for testing in an AR/VR simulator, as they did with location simulation, this would be very limiting for the many app use cases Apple didn't cover, and it could also be annoying to work with.

What I wish for instead is for Apple to provide developers with a way of testing AR/VR OS apps using the camera feed of a connected iPhone or iPad. This could be restricted to devices with a LiDAR scanner if that's a technical requirement. But it should also work wirelessly, as we will want to walk around to test our features in different real-world environments. The basic technology for that has already shipped in the form of Continuity Camera, which allows using an iPhone as a webcam for the Mac. Maybe that feature was just a by-product of testing functionality originally built for the internal team working on the AR product in the first place. Who knows? It could be a sign that such a feature is coming.

More importantly, I'm optimistic that we will get some way of testing for AR/VR OS without the real device because Apple has an interest in as many apps as possible supporting it. That means developing apps for it has to be as easy as possible. After all, the availability of (unique) apps is an important selling point of any new software platform.

What about the AI hype?

While I do wish for better auto-completion support in Xcode, or even a virtual coding assistant of some sort that writes code meeting the criteria I describe so I don't have to type it all out myself, I don't think we're there yet. I did play around with ChatGPT, but it got things wrong 80% of the time when I asked for code. Not only did it use deprecated APIs all the time, it also produced code that didn't build. Often, it didn't even understand what I wanted.

While a dedicated coding model from Apple might lead to better results, I don't think it would come close to what I'd consider "reliable" yet. And I'm sure Apple knows this. They might explore such a feature in the future, but I hope they won't jump on the current hype train and build something half-baked into Xcode. I prefer tools that are reliable and predictable, and I think Apple does, too. But the technology isn't there yet. I expect something big in this direction next year at the earliest, and only something small, if anything at all, this year.

Conclusion

So, these are my top 5 wishes for WWDC 2023. Do you agree with me? And what are yours? Let me know by commenting on Twitter here or on Mastodon there.
